Facts:

AI is running out of training data. Foundation model companies are willing to pay creators extra for their unused footage.

Content creators are receiving $1 to $4 per minute of footage, with higher rates for higher-quality video (such as 4K) or specialized formats like drone captures and 3D animation.

While creating content, creators often shoot hours of footage that never reaches their audience. In most cases, this footage sits unused or gets deleted. By selling it to AI companies, creators can earn revenue beyond brand deals and payouts from the platforms they post on.

But where does the actual personal content reside?

Your phone.

And that’s what OpenAI has managed to get access to (almost like the Trojan horse that led to the fall of Troy).

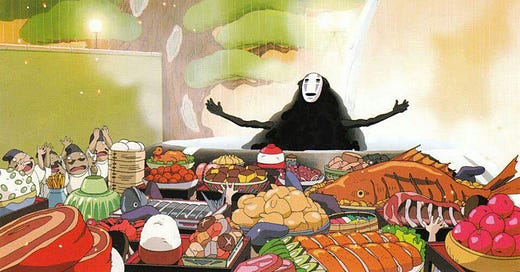

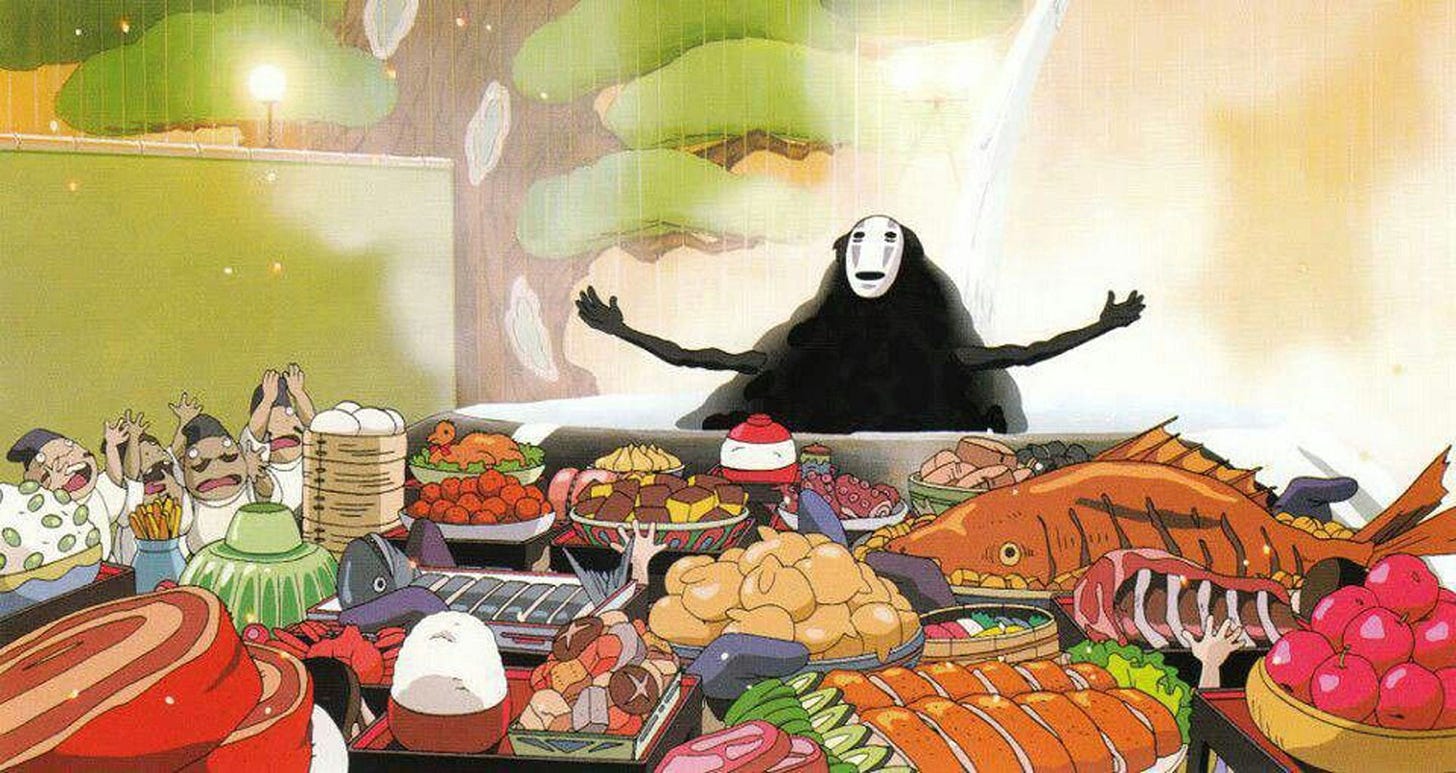

Thanks to the Studio Ghibli trend, more than a million users have signed up for ChatGPT (and are busy playing Ghibli-Ghibli). As Sama says, the servers are melting.

I am betting that most of these are personal pics, whose Ghibli versions are being shared on WhatsApp groups just for fun.

And in the process, OpenAI is actually getting a lot of data to train its models on.

But what about ChatGPT’s privacy rules?

Well, by default, whatever you upload can be used by OpenAI for training.

As simple as that.

Of course, you can opt out of OpenAI training on your personal data (go to settings, blah blah), but we all know 99.99% of users won’t even bother.

Plus, because users upload their personal pics of their own accord, the EU privacy rules are also taken care of: this now counts as a voluntary process!

Mo data. Mo moat.

More data means better realism and diversity in AI-generated images, a huge win for whoever gets access to people’s personal photos.

The AI would learn artistic styles more deeply, making outputs feel more authentic.

It could create better domain-specific outputs: Ghibli-style images, photorealistic renders, anime, etc.

A richer dataset could enhance AI’s ability to describe images or generate text based on visuals.

It would make AI better at context-aware generation (e.g., generating an image based on a complex story prompt).

Smart move, eh? Kudos to the product geek who actually pulled this off (?).

What’s next? I am guessing:

A feature for users to upload their out-of-rhythm singing and get a melodious version?

A feature for creators to upload their unused videos and get a storyline out of it?

What’s your take? What are you Ghibling?